Doug Kerr

In various areas of photographic shot and image delivery planning we consider the visual acuity of the human observer.

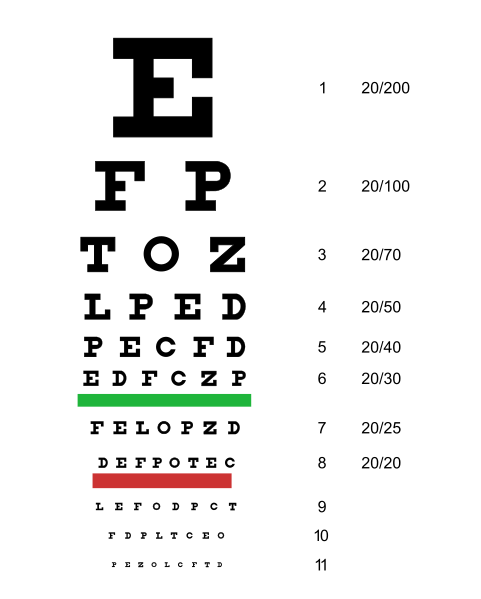

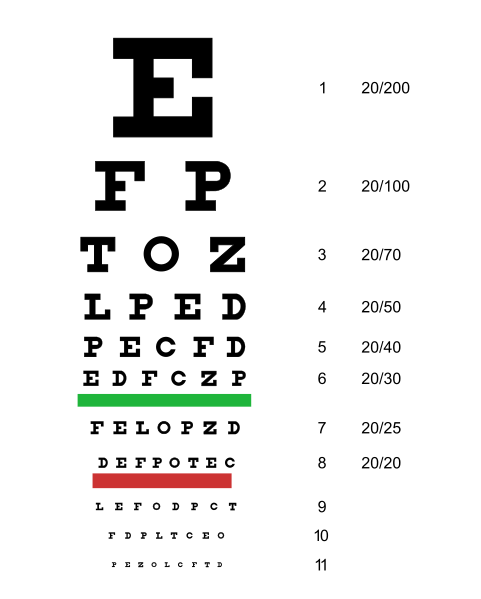

For example, in doing depth of field planning, under what I call "outlook A", we seek to have all significant scene features confined to a range of distances such that the blurring they suffer from not being at the distance on which the camera is focused will not be "significant" to the human viewer in the image viewing context we have in mind.

If we have no specific viewing context in mind, but are doing this planning in a "generic" way, we may instead presume a certain "standard" image viewing context.

Simplistically, we may define that criterion of "blurring not significant to the viewer" as being met when the blur figure from misfocus is of comparable dimension to the blur figure that characterizes human visual acuity.

If we seek to work out how this depth of field analysis should be done, we must then determine what that metric of human visual acuity is.
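The "not significant" criterion can be sketched numerically once an acuity angle has been chosen. In this minimal Python sketch the acuity angle is a parameter, since pinning it down is the subject of what follows. The helper names are hypothetical. The 250 mm viewing distance and 8x sensor-to-print enlargement in the usage lines are placeholder values for a "standard" viewing context; they are not figures from this post.

```python
import math


def arcmin_to_rad(arcmin: float) -> float:
    """Convert minutes of arc to radians (1 arcmin = 1/60 degree)."""
    return math.radians(arcmin / 60.0)


def blur_limit_on_image(acuity_arcmin: float, viewing_distance_mm: float) -> float:
    """Largest blur-figure diameter (mm) on the viewed image that subtends
    no more than acuity_arcmin at the given viewing distance."""
    return viewing_distance_mm * math.tan(arcmin_to_rad(acuity_arcmin))


def blur_limit_on_sensor(acuity_arcmin: float, viewing_distance_mm: float,
                         enlargement: float) -> float:
    """The same limit referred back to the sensor, dividing by the assumed
    linear enlargement from sensor to viewed image."""
    return blur_limit_on_image(acuity_arcmin, viewing_distance_mm) / enlargement


if __name__ == "__main__":
    # Hypothetical viewing context: 250 mm viewing distance, 8x enlargement.
    print(f"{blur_limit_on_image(1.0, 250.0):.4f} mm on the print")
    print(f"{blur_limit_on_sensor(1.0, 250.0, 8.0):.5f} mm on the sensor")
```

Because the limit scales linearly with the acuity angle, any 2:1 uncertainty in that angle carries straight through to a 2:1 uncertainty in the permissible blur figure.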

We quickly find it said that the acuity of the human eye "with normal vision" is one minute of arc (1/60°). But what exactly does that mean?

We soon are ensnared in the familiar 2:1 ambiguity that infests the entire area of "resolution" (lines vs. line pairs, etc.)

Often we hear it said that the human eye with normal vision can "resolve two lines separated by one minute of arc". What does that mean? It is normally taken to mean that if we have two black lines on a white background, and the center-to-center distance between the lines as viewed is one minute of arc, then the "normal vision" eye can just barely recognize them as distinct.
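To make the 2:1 ambiguity concrete, here is a minimal Python sketch that converts a stated acuity angle into a spatial frequency in cycles per degree. The function name is hypothetical and not from any library. It handles two readings of the angle. In the first, the angle is the center-to-center spacing of two lines, which is one full line pair. In the second, it is the width of a single line, which is half a line pair.

```python
def cycles_per_degree(acuity_arcmin: float, angle_is_line_pair: bool) -> float:
    """Spatial frequency (cycles/degree) implied by an acuity angle.

    angle_is_line_pair=True: the angle is the center-to-center spacing of
    two adjacent lines, i.e. one full cycle (line pair).
    angle_is_line_pair=False: the angle is the width of a single line,
    i.e. half a cycle, so one cycle spans twice the angle.
    """
    cycle_arcmin = acuity_arcmin if angle_is_line_pair else 2.0 * acuity_arcmin
    return 60.0 / cycle_arcmin  # 60 arcmin per degree


if __name__ == "__main__":
    for reading in (True, False):
        label = "line pair" if reading else "single line"
        print(f"1 arcmin as {label}: {cycles_per_degree(1.0, reading):.0f} cycles/degree")
```

The two readings of "one minute of arc" give 60 and 30 cycles per degree, differing by exactly a factor of two.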

Is that a correct interpretation? Probably not. Is that an accurate statement? I doubt it.

If we follow the trail of the definition of normal vision as tested with the classical Snellen eye chart, we find this matter very clearly defined in the literature (although I will express it here in my own words, which most directly relate to our outlook):

The characters (optotypes) on the modern Snellen chart are "Sloan letters" (after their designer, Louise Sloan), and (except for their curved outlines and diagonal strokes) we would describe them as being defined on a grid 5 pixels high.

Normal vision is defined as the subject being able to correctly identify five out of six Snellen optotypes when their total angular height as seen by the subject is five minutes of arc; that is, when the vertical "pixel pitch", or "pixel size", of the optotypes is one minute of arc.

The "normal vision" line of optotypes on the Snellen chart (the one with the red bar under it) subtends five minutes of arc vertically at a viewing distance of 20 feet/6 m.
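As a rough check on scale, this sketch computes the physical height of a letter that subtends five minutes of arc at the test distance. It also computes the height of one of the letter's five grid "pixels". The helper names are hypothetical. Note that 20 feet is 6.096 m, so the two conventions correspond to slightly different letter sizes.

```python
import math

FEET_TO_MM = 304.8  # 1 foot = 304.8 mm exactly
GRID_PIXELS = 5     # Sloan letters are 5 "pixels" high


def subtended_height_mm(angle_arcmin: float, distance_mm: float) -> float:
    """Height of a target, centered on the line of sight, that subtends
    angle_arcmin at distance_mm."""
    half_angle_rad = math.radians(angle_arcmin / 60.0) / 2.0
    return 2.0 * distance_mm * math.tan(half_angle_rad)


if __name__ == "__main__":
    for label, distance_mm in (("20 ft", 20 * FEET_TO_MM), ("6 m", 6000.0)):
        letter = subtended_height_mm(5.0, distance_mm)
        print(f"{label}: letter {letter:.2f} mm, pixel {letter / GRID_PIXELS:.2f} mm")
```

At either distance the "normal vision" letter comes out a little under 9 mm tall, with a pixel of roughly 1.75 mm.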

This "normal" visual acuity is said to have a "Snellen fraction" of 1.0. When working in feet, this is usually written as "20/20"; when working in SI units (meters), it is written as "6/6".

A person who can at best distinguish, at a distance of 20 feet/6 m, the row of optotypes with the green bar under it is said to have 20/30 (6/9) acuity.
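The Snellen fraction reduces to a single number by simple division. This minimal sketch uses Python's `fractions` module so that, for example, 20/30 and 6/9 compare exactly equal. The function name is hypothetical.

```python
from fractions import Fraction


def snellen_value(notation: str) -> Fraction:
    """Value of a Snellen fraction such as "20/30" or "6/9": the test
    distance divided by the distance at which an eye with "normal"
    vision could just distinguish the same optotypes."""
    test_distance, normal_distance = (Fraction(part.strip()) for part in notation.split("/"))
    return test_distance / normal_distance


if __name__ == "__main__":
    for notation in ("20/20", "6/6", "20/30", "6/9"):
        print(f"{notation}: {float(snellen_value(notation)):.3f}")
```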

The literal meaning of the notation is that this subject can distinguish, at a distance of 20 feet (6 m), the optotypes a person with "normal" vision can distinguish at a distance of 30 feet (9 m).

The implication (in our terms) of an acuity stated as "20/30" is that the subject can recognize optotypes that vertically subtend 7.5 minutes of arc.
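The arithmetic behind the 7.5 figure generalizes directly. The smallest recognizable optotype height is the "normal" five minutes of arc divided by the Snellen fraction, and the "pixel" size is one fifth of that height. Here is a minimal sketch in Python; the function name is hypothetical.

```python
NORMAL_OPTOTYPE_ARCMIN = 5.0  # "normal" (20/20, 6/6) optotype height
GRID_PIXELS = 5               # Sloan letters are 5 "pixels" high


def optotype_arcmin(test_distance: float, normal_distance: float) -> tuple[float, float]:
    """Return (letter height, pixel size) in minutes of arc for the smallest
    optotypes a subject with acuity test_distance/normal_distance can recognize."""
    letter = NORMAL_OPTOTYPE_ARCMIN * normal_distance / test_distance
    return letter, letter / GRID_PIXELS


if __name__ == "__main__":
    for test, normal in ((20, 20), (20, 30), (6, 9), (20, 40)):
        letter, pixel = optotype_arcmin(test, normal)
        print(f"{test}/{normal}: letter {letter:g} arcmin, pixel {pixel:g} arcmin")
```

For 20/30 (6/9) this gives a letter height of 7.5 minutes of arc and a pixel size of 1.5 minutes of arc.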

Best regards,

Doug